Why Anthropic’s “Skills” quietly changed how agents should work

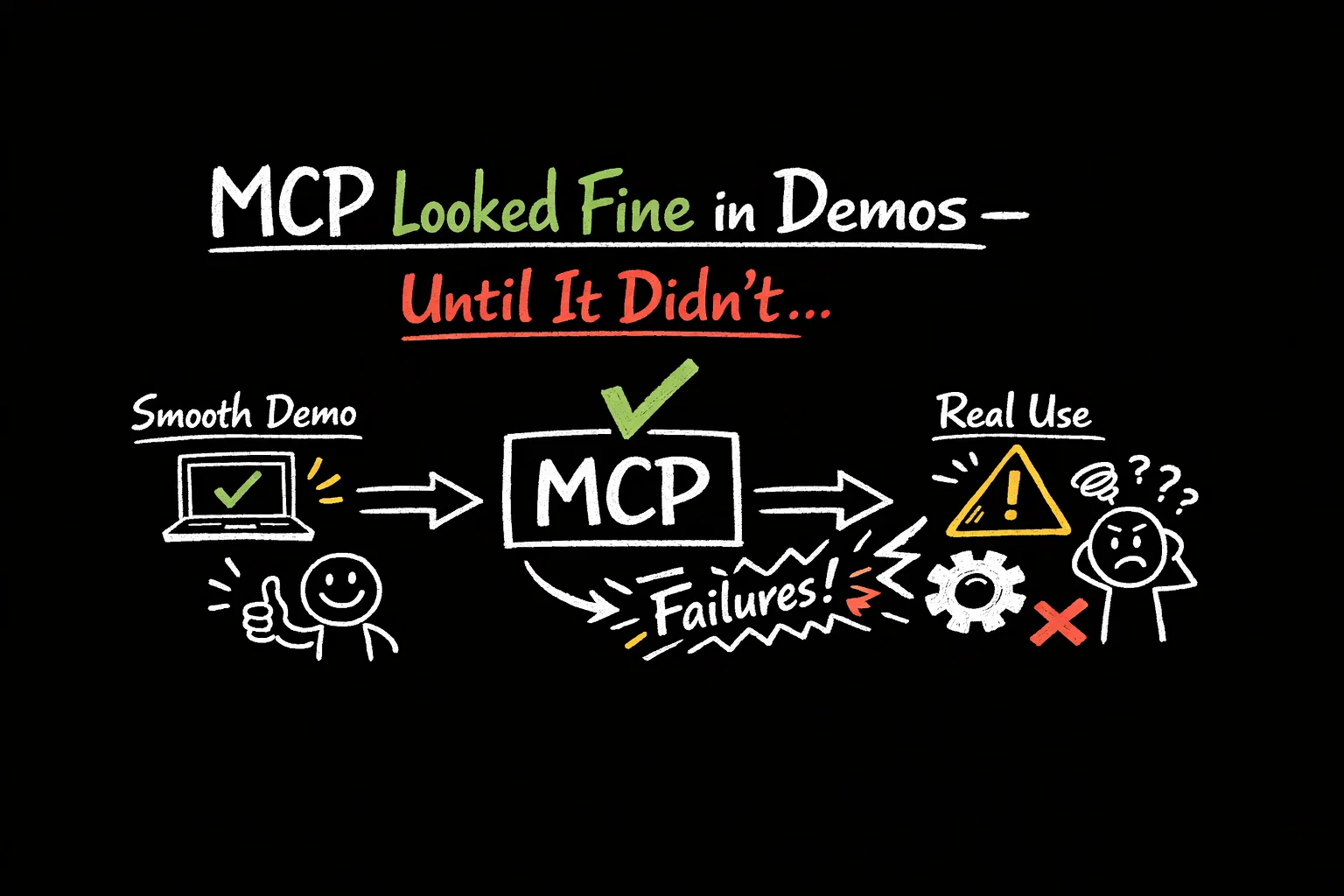

Let’s be honest for a second.

MCP looks great in demos.

It feels clean. Structured. Powerful.

And then you try to use it in a real system.

Suddenly things start to wobble.

Tools get confused.

Context fills up fast.

Workflows drift instead of finishing strong.

If you’ve been there, don’t worry.


You’re not doing it wrong.

This is exactly the moment Anthropic noticed too — and it explains why Skills exist.


The Hidden Cost of “Just Add More Tools”

On paper, MCP is simple.

You run one or more MCP servers.

Each server exposes tools.

Each tool has a schema.

The client loads all of that into the model’s context.

In theory, it’s elegant.

A typed bridge between language models and the real world.

In practice, it’s… a lot.

One GitHub MCP server can expose 90+ tools.

That easily turns into tens of thousands of tokens of schemas and descriptions.

And all of that lands in the context window before the model even thinks about your task.

It doesn’t matter if you only want to summarize a paragraph.

The model still has to scan the entire tool universe first.
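To get a feel for that upfront cost, here is a rough sketch. The tool definitions are hypothetical placeholders in the general shape MCP servers expose (name, description, inputSchema), and the four-characters-per-token rule is only a crude assumption, not a real tokenizer.

```python
import json

def rough_token_count(text: str) -> int:
    """Very rough estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return max(1, len(text) // 4)

def make_tool(i: int) -> dict:
    """Build a hypothetical tool definition, used only to illustrate schema size."""
    return {
        "name": f"example_tool_{i}",
        "description": "Hypothetical tool used only to illustrate schema size. " * 3,
        "inputSchema": {
            "type": "object",
            "properties": {
                "owner": {"type": "string", "description": "Repository owner"},
                "repo": {"type": "string", "description": "Repository name"},
                "query": {"type": "string", "description": "Free-text filter"},
            },
            "required": ["owner", "repo"],
        },
    }

# 90 tools, matching the scale mentioned above; the contents are made up.
tools = [make_tool(i) for i in range(90)]
upfront_tokens = sum(rough_token_count(json.dumps(t)) for t in tools)

task = "Summarize this paragraph."
task_tokens = rough_token_count(task)

print(f"tool schemas: ~{upfront_tokens} tokens, task: ~{task_tokens} tokens")
```

The exact numbers depend on the tokenizer and the real schemas. The point is the ratio: the tool listing dwarfs the request it is supposed to serve.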

That’s where things start to break down.

When Accuracy Compounds in the Wrong Direction

Tool accuracy doesn’t fail all at once.

It erodes step by step.

If each tool choice is 90% reliable, five chained decisions all succeed only about 59% of the time.
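The arithmetic is worth seeing once. This is a minimal sketch that assumes every step fails independently of the others, which real workflows only approximate.

```python
def chain_success(per_step: float, steps: int) -> float:
    """Probability that every step succeeds, assuming steps fail independently."""
    return per_step ** steps

for steps in (1, 3, 5, 10):
    print(steps, round(chain_success(0.9, steps), 3))
# 1 0.9
# 3 0.729
# 5 0.59
# 10 0.349
```

At ten chained decisions, a 90% tool choice is closer to a coin flip than to a reliable system.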

Anyone who’s read community feedback knows the pattern:

The first step works.

The second step works.

And somewhere later… things quietly go sideways.

Parameters look valid, but logic is wrong.

Earlier constraints get forgotten.

The model isn’t “broken” — it’s overloaded.

I’ve seen this happen in production systems.

The task is clear.

The tools are correct.

The failure comes from cognitive overload.

MCP doesn’t just expose tools.

It exposes everything, all at once.

The Real Issue Was Never MCP Itself

Here’s the important part.

Anthropic didn’t try to “fix” MCP by adding more rules or guardrails.

They didn’t publish a dramatic critique.

They didn’t declare anything obsolete.

Instead, they changed how the model encounters MCP.

That’s where Skills come in.

Skills Flip the Flow — Carefully and Quietly

A Skill is simply a folder.

Inside it, there’s a SKILL.md file.

At the top, you get lightweight metadata: chiefly a name and a description.

Below that, you add instructions, references, and links to other files.

And here’s the key difference:

At startup, the model does not read every Skill in full.

It only sees the minimal metadata.

Just enough to answer one question:

“When might this be useful?”

Everything else stays offstage.
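Here is one way that metadata-only startup pass could look. It is a sketch, not Anthropic's loader: the `pdf-forms` folder, the helper names, and the simple `key: value` parsing are all assumptions made for illustration.

```python
from pathlib import Path
import tempfile

def read_frontmatter(skill_md: Path) -> dict:
    """Read only the leading '---' block of a SKILL.md, stopping before the body."""
    meta = {}
    with skill_md.open(encoding="utf-8") as fh:
        if fh.readline().strip() != "---":
            return meta  # no frontmatter found
        for line in fh:
            if line.strip() == "---":
                break  # end of metadata; the body is never read
            key, sep, value = line.partition(":")
            if sep:
                meta[key.strip()] = value.strip()
    return meta

def index_skills(root: Path) -> list:
    """Collect the metadata of every skill folder under root."""
    index = []
    for skill_md in sorted(root.glob("*/SKILL.md")):
        meta = read_frontmatter(skill_md)
        meta["path"] = str(skill_md)
        index.append(meta)
    return index

# Demo with a throwaway, hypothetical skill folder.
root = Path(tempfile.mkdtemp())
(root / "pdf-forms").mkdir()
(root / "pdf-forms" / "SKILL.md").write_text(
    "---\n"
    "name: pdf-forms\n"
    "description: Fill in and validate PDF forms. Use when the user uploads a form.\n"
    "---\n"
    "# Instructions\n"
    "Long body that stays out of context until the skill is chosen.\n",
    encoding="utf-8",
)

skill_index = index_skills(root)
print(skill_index[0]["name"], "-", skill_index[0]["description"])
```

The index holds a couple of short strings per Skill. The instructions below the second `---` are never read at this stage.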

Progressive Disclosure, Not Context Dumping

When a user asks for something, the process unfolds step by step.

First, the model looks at Skill names and descriptions.

If one seems relevant, it opens that specific SKILL.md.

If that file links to other documents, only those are loaded — and only if needed.

If the Skill bundles code, that code is actually run and only its output comes back, instead of the model simulating the logic token by token.

Nothing extra enters the context by accident.

Context is layered.

Not dumped.

That’s the entire shift.
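The layering can be sketched in a few lines. Everything here is hypothetical: the `report-builder` Skill, its linked files, and the `Context` class that stands in for the context window. In a real agent the model decides what to open; the hard-coded "needs charts" filter only marks where that decision would happen.

```python
import re

# Hypothetical in-memory skill; a real one would be files in a folder.
SKILL_FILES = {
    "SKILL.md": (
        "---\nname: report-builder\n"
        "description: Build weekly reports from CSV exports.\n---\n"
        "Follow the steps below. For chart rules see [charts](charts.md).\n"
        "For the legal footer see [footer](footer.md).\n"
    ),
    "charts.md": "Use bar charts for counts and line charts for trends.\n",
    "footer.md": "Append the standard confidentiality footer.\n",
}

class Context:
    """Tracks which pieces of text have entered the (simulated) context window."""

    def __init__(self):
        self.loaded = []

    def add(self, label, text):
        self.loaded.append(label)
        return text

def split_skill(text):
    """Return (frontmatter, body) of a SKILL.md string."""
    _, front, body = text.split("---\n", 2)
    return front, body

ctx = Context()
front, body = split_skill(SKILL_FILES["SKILL.md"])

# Level 1: only the metadata is visible at startup.
ctx.add("metadata", front)

# Level 2: the body is loaded once the skill looks relevant to the request.
instructions = ctx.add("SKILL.md body", body)

# Level 3: linked files are loaded one at a time, only when the task needs them.
linked = re.findall(r"\]\(([^)]+\.md)\)", instructions)
needed = [name for name in linked if "chart" in name]  # this task only needs charts
for name in needed:
    ctx.add(name, SKILL_FILES[name])

print(ctx.loaded)
# ['metadata', 'SKILL.md body', 'charts.md']
```

The footer file is linked but never loaded, because this particular task never needed it.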

Why This Feels Different From Typical MCP Setups

A Skill can include a lot:

- Markdown files

- Worked examples

- Reference tables

- Even Python scripts that behave like small, reliable services

But none of that touches the context window until the model explicitly asks for it.
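The script case deserves a concrete picture. In this sketch, a hypothetical helper (`sum_column.py`, invented here) is run as a subprocess, and only its printed result would be handed back to the model. The runner function is an illustration, not how any particular product implements it.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical helper script bundled with a skill; its source never enters context.
script = Path(tempfile.mkdtemp()) / "sum_column.py"
script.write_text(
    "import sys\n"
    "values = [float(v) for v in sys.argv[1:]]\n"
    "print(sum(values))\n",
    encoding="utf-8",
)

def run_skill_script(path: Path, *args: str) -> str:
    """Execute a bundled script and return only its stdout."""
    result = subprocess.run(
        [sys.executable, str(path), *args],
        capture_output=True,
        text=True,
        check=True,
        timeout=30,
    )
    return result.stdout.strip()

output = run_skill_script(script, "1.5", "2.5", "4")
print(output)
# 8.0
```

The model gets a short, deterministic answer. It never has to reason through the arithmetic or read the script.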

That’s the opposite of traditional MCP patterns.

And this isn’t a UI-only trick.

Skills work across apps, APIs, developer tools, and the Agent SDK.

They share the same execution environment.

They scale naturally.

This Is RAG — Just Applied to Tools

If you’ve built retrieval-augmented systems before, this should feel familiar.

You don’t load your entire knowledge base upfront.

You index it.

You retrieve only what matters.

Then you reason with that slice.

Skills apply the same idea to tools and procedures.

- Metadata acts as the index

- Skill documents are the retrieved content

- Instructions + code drive execution

- MCP tools handle final integrations
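The mapping above can be sketched as a tiny retrieval step. The Skill names and descriptions are made up, and the word-overlap score is only a stand-in: in practice the model itself reads the descriptions and makes the choice.

```python
from typing import Optional

# Hypothetical skill index: only names and descriptions, as seen at startup.
SKILL_INDEX = [
    {"name": "form-processing",
     "description": "extract fields from uploaded forms and validate them"},
    {"name": "analytics",
     "description": "aggregate csv data and compute summary statistics"},
    {"name": "writing-pipeline",
     "description": "draft and edit articles in the house style"},
]

def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().replace(",", " ").replace(".", " ").split())

def retrieve_skill(request: str, index: list) -> Optional[dict]:
    """Pick the skill whose description shares the most words with the request.

    Word overlap is a stand-in; in practice the model makes this choice itself.
    """
    words = tokenize(request)
    scored = [(len(words & tokenize(s["description"])), s) for s in index]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best if best_score > 0 else None

chosen = retrieve_skill("Compute summary statistics for this CSV export", SKILL_INDEX)
print(chosen["name"] if chosen else "no skill matched")
# analytics
```

Only after this step would the chosen Skill's instructions, code, and any MCP tools it relies on come into play.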

Instead of one massive tool universe, you get focused workflows.

What This Enables in Practice

Think about what you can bundle cleanly now:

- A form-processing Skill that knows exactly which tools to call

- An analytics Skill that crunches data locally before exporting results

- A writing pipeline Skill built from your own examples and helper scripts

The model doesn’t need to know everything anymore.

It only needs to choose the right Skill.

The Skill handles the rest.

That’s RAG-MCP in action.

Why This Matters Beyond Demos

For small experiments, brute force works.

Short conversations hide the cracks.

But real systems are different.

Multi-tenant environments.

Long-lived threads.

Sensitive workflows.

Agents calling agents.

That’s where you start seeing:

- Surprising tool choices

- Valid-looking inputs that break business rules

- Workflows that degrade over time as context fights itself

You can patch around this.

Trim schemas.

Split servers.

Add supervising agents.

But the core problem remains:

the model is overloaded before it even starts.

Skills Change the Mental Model

The shift is subtle but powerful.

From:

“The model must understand every tool.”

To:

“The model must choose the right Skill.”

That’s a much healthier way to build.

It mirrors how humans learn, too.

We don’t hand new teammates the entire wiki on day one.

We give them a guide — and point them to details when needed.

Skills formalize that pattern.

A Quiet but Important Direction Change

There’s something refreshing about how this was introduced.

No grand announcements.

No dramatic declarations.

No blame shifted to users.

Just a practical acknowledgement of reality:

Context is finite.

Tools are growing.

Agents need a better way to cope.

Skills are composable.

They’re portable.

They’re efficient.

And most importantly, they recognize that the future isn’t about piling on more tools.

It’s about relating to tools better.

Anthropic didn’t abandon MCP.

They made it survivable.

And if you’re building agents that need to do real work, that matters more than any big headline ever could.