For Linux enthusiasts and system administrators, a sluggish boot process can be a significant source of frustration, hindering productivity and sometimes pointing to underlying system issues.

This in-depth guide will equip you with the knowledge and tools to diagnose and resolve the common culprits behind a slow-starting Linux system, transforming your boot experience from a crawl to a sprint.

Understanding the Linux Boot Process

Before diving into troubleshooting, it’s essential to have a basic understanding of the Linux boot sequence.

In most modern Linux distributions, systemd is the init system that manages the startup process. It’s responsible for launching services, mounting filesystems, and bringing the system to a fully operational state. The boot process can be broadly divided into several stages:

- Firmware (BIOS/UEFI): Initializes the hardware.

- Bootloader (GRUB): Loads the Linux kernel, and usually an initial RAM disk (initrd/initramfs), into memory.

- Kernel: Initializes core hardware and, typically with the help of the initrd, mounts the root filesystem.

- Init System (systemd): Starts system services and brings up the user session.

A delay in any of these stages can contribute to an overall slow boot time.

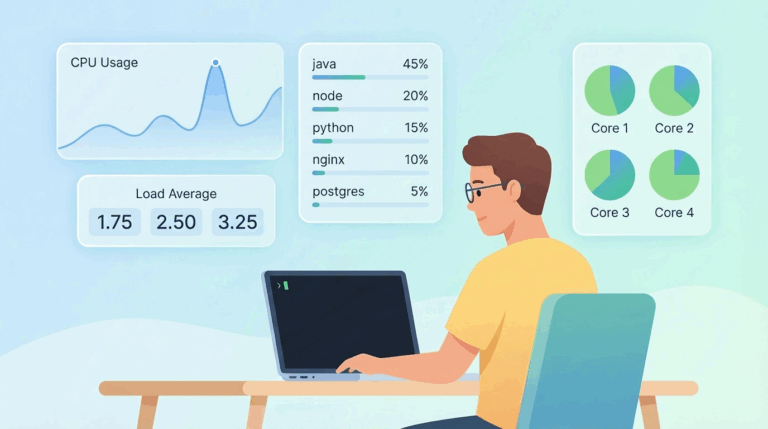

The Toolkit: Essential Commands for Boot Analysis

The key to resolving a slow boot is to first identify the bottleneck. Fortunately, Linux provides powerful built-in tools for this purpose, with systemd-analyze being the most crucial.

systemd-analyze: Your Primary Diagnostic Tool

This versatile command is the starting point for any boot time investigation.

- Overall Boot Time: To get a high-level overview of your boot time, simply run:

systemd-analyze

- Identifying Slow Services with blame: To pinpoint which services are taking the longest to start, use the blame subcommand:

systemd-analyze blame

journalctl: The systemd journal can provide detailed logs that may reveal errors or timeouts during the boot process. To view logs for a specific service, use the -u flag:

journalctl -u <service-name>
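To run these checks in one pass, the commands above can be wrapped in a small report script. This is a minimal bash sketch, not part of any standard tooling: the function names `collect_boot_report` and `run_if_available` and the default file name `boot-report.txt` are hypothetical, and each command is guarded so the script still completes on machines (or containers) where `systemd-analyze` or `journalctl` is missing or unusable.

```bash
#!/usr/bin/env bash

# Run a command only if it is installed; never let a failure abort the report.
run_if_available() {
  if command -v "$1" >/dev/null 2>&1; then
    "$@" 2>&1 || echo "($1 exited with status $?)"
  else
    echo "($1 is not available on this system)"
  fi
}

# Write the boot summary, the ten slowest units, and one service's log for the
# current boot into a single file, then print the file's path.
collect_boot_report() {
  local out="${1:-boot-report.txt}"   # hypothetical default file name
  local service="${2:-cloud-init}"    # service whose journal entries to include
  {
    echo "== systemd-analyze =="
    run_if_available systemd-analyze
    echo "== systemd-analyze blame (top 10) =="
    run_if_available systemd-analyze blame | head -n 10
    echo "== journalctl -u ${service} -b =="
    run_if_available journalctl -u "$service" -b --no-pager
  } > "$out"
  echo "$out"
}
```

For example, `collect_boot_report /tmp/boot-report.txt cloud-init` gathers the summary, the slowest units, and the cloud-init log for the current boot into one file. Run it from a root shell so `journalctl` can read the full system journal.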

Case Study: Solving a Real-World 7+ Minute Boot Delay

Let’s apply these tools to a real-world example where a user reported “Startup is very slow”. This case perfectly illustrates the troubleshooting workflow from diagnosis to a definitive solution.

Step 1: Check the Overall Boot Time

The first command, systemd-analyze, immediately confirms the problem is severe.

[root@test002 ~]# sudo systemd-analyze

Startup finished in 1.351s (kernel) + 2.389s (initrd) + 7min 41.934s (userspace) = 7min 45.675s

multi-user.target reached after 6min 13.237s in userspace.

- Analysis: A total boot time of nearly 8 minutes is unacceptable. The kernel and initrd stages take under 4 seconds combined, so the problem lies almost entirely within the userspace phase, which accounts for 7min 41.934s.
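The arithmetic behind this analysis can be checked directly from the summary line. The bash sketch below converts systemd time spans such as `7min 41.934s` into seconds and reports the userspace share of the total. The helper names `to_seconds` and `userspace_share` are hypothetical, and the parser assumes the h/min/s/ms/us units that `systemd-analyze` typically prints (larger units such as d or w are ignored in this sketch).

```bash
#!/usr/bin/env bash

# Convert a systemd time span ("7min 41.934s", "852ms") to seconds.
# Assumption: only h, min, s, ms and us units appear; other units are ignored.
to_seconds() {
  echo "$1" | awk '{
    total = 0
    for (i = 1; i <= NF; i++) {
      v = $i; u = $i
      sub(/[^0-9.]+$/, "", v)   # numeric part, e.g. "41.934"
      sub(/^[0-9.]+/, "", u)    # unit part, e.g. "s"
      if (u == "h") total += v * 3600
      else if (u == "min") total += v * 60
      else if (u == "s") total += v
      else if (u == "ms") total += v / 1000
      else if (u == "us") total += v / 1000000
    }
    printf "%.3f\n", total
  }'
}

# From a "Startup finished in ..." line, print the userspace time, the total,
# and userspace as a percentage of the total.
userspace_share() {
  local line="$1" user total
  user=$(sed -E 's/.*\+ ([^+]+) \(userspace\).*/\1/' <<<"$line")
  total=$(sed -E 's/.*= //' <<<"$line")
  awk -v u="$(to_seconds "$user")" -v t="$(to_seconds "$total")" \
    'BEGIN { printf "userspace=%ss total=%ss share=%.1f%%\n", u, t, 100 * u / t }'
}

# Case-study line; on a live system pass "$(systemd-analyze | head -n 1)" instead.
userspace_share 'Startup finished in 1.351s (kernel) + 2.389s (initrd) + 7min 41.934s (userspace) = 7min 45.675s'
```

For the case-study line this prints `userspace=461.934s total=465.675s share=99.2%`, which confirms that the kernel and initrd are not worth investigating here.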

Step 2: Pinpoint the Slowest Service

Next, we use systemd-analyze blame to find the exact service causing the delay.

[root@test002 ~]# sudo systemd-analyze blame

5min 59.740s cloud-init.service

16.129s osqueryd.service

9.724s cloud-init-local.service

...

- Analysis: The output is crystal clear. The cloud-init.service unit is responsible for almost 6 minutes of the boot delay. We have found our culprit.
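On a busy system, blame output can run to dozens of lines. The bash sketch below filters it down to units that exceed a time threshold, converting each time span to seconds so the values compare numerically. The function name `slow_units` and the 10-second default threshold are illustrative assumptions, and the demo feeds it the case-study lines rather than live output.

```bash
#!/usr/bin/env bash

# Read `systemd-analyze blame` output on stdin and print every unit whose
# startup time is at least THRESHOLD seconds (default 10) as "unit seconds".
# Assumption: time spans use only h, min, s, ms or us units.
slow_units() {
  local threshold="${1:-10}"
  awk -v limit="$threshold" '
    NF >= 2 {
      secs = 0
      for (i = 1; i < NF; i++) {          # every field except the unit name
        v = $i; u = $i
        sub(/[^0-9.]+$/, "", v)           # numeric part
        sub(/^[0-9.]+/, "", u)            # unit part
        if (u == "h") secs += v * 3600
        else if (u == "min") secs += v * 60
        else if (u == "s") secs += v
        else if (u == "ms") secs += v / 1000
        else if (u == "us") secs += v / 1000000
      }
      if (secs >= limit) printf "%s %.3f\n", $NF, secs
    }'
}

# Demo on the case-study output; on a live system: systemd-analyze blame | slow_units 10
slow_units 10 <<'EOF'
5min 59.740s cloud-init.service
    16.129s osqueryd.service
     9.724s cloud-init-local.service
EOF
```

Keep in mind that units start in parallel, so blame times overlap and do not simply add up to the total boot time; `systemd-analyze critical-chain` shows which units actually sat on the critical path.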

Step 3: Investigate the Service Logs

We now use journalctl to inspect the logs of cloud-init.service to find the root cause.

[root@test002 ~]# sudo journalctl -u cloud-init --no-pager

...

Oct 27 22:29:05 test002 cloud-init[1051]: ... url_helper.py[WARNING]: Calling 'http://169.254.169.254/...' failed ... Connection to 169.254.169.254 timed out...

...

Oct 27 22:32:14 test002 cloud-init[1051]: ... DataSourceEc2.py[CRITICAL]: Giving up on md from ['http://169.254.169.254/...'] after 239 seconds

...

Oct 27 22:34:14 test002 cloud-init[1051]: ... DataSourceCloudStack.py[CRITICAL]: Giving up on waiting for the metadata from [...] after 119 seconds

...

- Analysis: The logs reveal the full story. cloud-init is trying to reach network metadata services that are not available, and each attempt ends in a long timeout. The two waits (239s + 119s = 358s, just under 6 minutes) account for nearly all of the 5min 59.740s that blame attributed to cloud-init.service.
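The same tally can be pulled from the journal instead of read by eye. This bash sketch sums the "after N seconds" figures on cloud-init's "Giving up" lines; the function name `sum_giveup_seconds` is hypothetical, and it assumes the message wording shown in the log excerpt above, which may differ between cloud-init versions.

```bash
#!/usr/bin/env bash

# Sum the durations reported on "Giving up ... after N seconds" lines read
# from stdin. Assumes the cloud-init message wording from the excerpt above.
sum_giveup_seconds() {
  grep 'Giving up' \
    | sed -nE 's/.*after ([0-9]+) seconds.*/\1/p' \
    | awk '{ total += $1 } END { print total + 0 }'
}

# Demo on the case-study lines; on a live system:
#   journalctl -u cloud-init -b --no-pager | sum_giveup_seconds
sum_giveup_seconds <<'EOF'
Oct 27 22:29:05 test002 cloud-init[1051]: ... url_helper.py[WARNING]: Calling 'http://169.254.169.254/...' failed ...
Oct 27 22:32:14 test002 cloud-init[1051]: ... DataSourceEc2.py[CRITICAL]: Giving up on md from [...] after 239 seconds
Oct 27 22:34:14 test002 cloud-init[1051]: ... DataSourceCloudStack.py[CRITICAL]: Giving up on waiting for the metadata from [...] after 119 seconds
EOF
```

For the excerpt it prints 358, that is 5 min 58 s, in line with the 5min 59.740s reported by blame.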

Digging Deeper: The “Why” Behind the cloud-init Delay

To implement the correct fix, we need to understand exactly what cloud-init was trying to do.

The Mystery of the IP Address: 169.254.169.254

You will not find the IP address 169.254.169.254 in a standard user configuration file. The address is built into the cloud-init source code as a default because it is the link-local address of the Amazon Web Services (AWS) EC2 instance metadata service, a convention that several other cloud platforms have also adopted.

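This IP is defined as a constant within the Python source file for the EC2 datasource module, typically located at /usr/lib/python3/dist-packages/cloudinit/sources/DataSourceEc2.py on Debian and Ubuntu. RHEL-family systems use a versioned site-packages path instead, such as /usr/lib/python3.9/site-packages/cloudinit/sources/DataSourceEc2.py.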
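Because the path differs between distributions, it can be easier to ask Python where the `cloudinit` package is installed and search from there. This is a bash sketch; the helper names `cloudinit_sources_dir` and `find_metadata_address` are hypothetical, and the first one assumes cloud-init is importable by the system `python3`.

```bash
#!/usr/bin/env bash

# Print the cloudinit/sources directory of the installed cloud-init package,
# whether it lives under dist-packages (Debian/Ubuntu) or site-packages (RHEL).
# Assumption: cloud-init is importable by the system python3.
cloudinit_sources_dir() {
  python3 -c 'import os, cloudinit; print(os.path.join(os.path.dirname(cloudinit.__file__), "sources"))'
}

# Search a sources directory (default: the installed one) for the metadata address.
find_metadata_address() {
  local dir="${1:-$(cloudinit_sources_dir)}"
  grep -rnF --include='*.py' '169.254.169.254' "$dir"
}
```

On a machine with cloud-init installed, running `find_metadata_address` with no argument should list the matching lines in DataSourceEc2.py, and possibly in other datasources that use the same address.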

The Probing Sequence: Why It Tried CloudStack Next

By default, cloud-init performs automatic datasource detection: it probes a list of common cloud platforms until one responds. This list is set with the datasource_list key, either in /etc/cloud/cloud.cfg or in a drop-in file under /etc/cloud/cloud.cfg.d/. If the list is not defined, cloud-init uses a built-in default sequence, which often includes “Ec2” and “CloudStack”.
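To see which list a given machine will actually use, you can check the base file and the drop-ins in the order cloud-init reads them: /etc/cloud/cloud.cfg first, then /etc/cloud/cloud.cfg.d/*.cfg in lexical order, with later files overriding earlier ones. The bash sketch below only approximates that. The function name is hypothetical, it recognizes only single-line entries such as `datasource_list: [ Ec2, None ]`, and it ignores other places the setting can come from, such as the kernel command line.

```bash
#!/usr/bin/env bash

# Print the datasource_list that wins after cloud.cfg and cloud.cfg.d/*.cfg are
# read in order (later files override earlier ones).
# Assumption: entries are single-line YAML flow lists, e.g. "datasource_list: [ None ]".
effective_datasource_list() {
  local root="${1:-/etc/cloud}" f v value=""
  for f in "$root/cloud.cfg" "$root"/cloud.cfg.d/*.cfg; do
    [ -f "$f" ] || continue                      # also skips an unmatched glob
    v=$(sed -nE 's/^datasource_list:[[:space:]]*(\[.*\]).*/\1/p' "$f" | tail -n 1)
    if [ -n "$v" ]; then value="$v"; fi
  done
  echo "${value:-(not set: cloud-init uses its built-in default)}"
}
```

Running `effective_datasource_list` with no argument inspects /etc/cloud on the current machine; passing a directory lets you check a copied configuration instead.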

In our case study, this is what happened:

- Probe for Ec2: It tried to contact the hardcoded AWS metadata address (169.254.169.254). Since the server was not on AWS, its requests kept timing out, and cloud-init gave up after 239 seconds.

- Probe for CloudStack: After the Ec2 probe failed, it moved to the next datasource in its list, CloudStack. It tried to reach a CloudStack metadata service, which also failed, and gave up after 119 seconds.

The root cause is now perfectly clear: The system has cloud-init enabled, but it is not running in a cloud environment where it can find a metadata service, forcing it to wait through multiple, long network timeouts.

The Definitive Solution for the cloud-init Problem

Since the machine is not on a compatible cloud platform, the correct solution is to tell cloud-init to stop probing for network-based datasources. This is a targeted fix: cloud-init stays installed, but it no longer waits on network timeouts during boot.

- Create a new configuration file to override the default behavior:

sudo nano /etc/cloud/cloud.cfg.d/99-disable-network.cfg

- Add the following content to this new file:

# Tell cloud-init that no network-based datasources should be used.
datasource_list: [ None ]

This configuration explicitly instructs cloud-init to consider only the “None” datasource, skipping the time-consuming network probes for Ec2, CloudStack, and others. After a reboot, cloud-init.service should no longer sit through these timeouts; confirm the improvement with systemd-analyze blame.
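If you manage several machines, the same override can be written non-interactively instead of through nano. This bash sketch is one way to do it. The function name `write_datasource_override` is hypothetical, and the target directory is a parameter (defaulting to /etc/cloud/cloud.cfg.d) so you can try it in a scratch directory first; writing to the real location requires root.

```bash
#!/usr/bin/env bash

# Write the "None only" datasource override into DIR (default: the real drop-in
# directory) and print the path of the file written. Re-running it is harmless:
# the file is overwritten with the same content.
write_datasource_override() {
  local dir="${1:-/etc/cloud/cloud.cfg.d}"
  local file="$dir/99-disable-network.cfg"
  mkdir -p "$dir" || return 1
  cat > "$file" <<'EOF' || return 1
# Tell cloud-init that no network-based datasources should be used.
datasource_list: [ None ]
EOF
  echo "$file"
}
```

After running it as root, reboot and check systemd-analyze blame again to confirm that cloud-init.service has dropped down the list.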

Other Common Causes of a Slow Boot

While the case study focused on a misconfigured service, other factors can also slow down your boot.

- Unnecessary Startup Services: Use systemd-analyze blame to find other services you might not need (e.g., bluetooth.service on a server) and disable them with sudo systemctl disable <service-name>.

- Slow Hard Drives: Traditional hard disk drives (HDDs) can be a major bottleneck. Upgrading to a solid-state drive (SSD) is usually the most effective hardware upgrade for improving boot time and overall system performance.

- Filesystem Checks (fsck): If the systemd-fsck units (such as systemd-fsck-root.service) take a long time on every boot, your system may not be shutting down cleanly or may have underlying disk errors. Review your shutdown procedures and check journalctl for disk-related errors.

- GRUB Timeout: The GRUB bootloader menu introduces a brief, intentional delay. You can reduce it by editing /etc/default/grub, changing GRUB_TIMEOUT=5 to GRUB_TIMEOUT=1, and then running sudo update-grub (Debian/Ubuntu) or the equivalent for your distribution (for example, sudo grub2-mkconfig -o /boot/grub2/grub.cfg on many RHEL and Fedora systems).

- Desktop Environment: Heavy desktop environments and numerous startup applications can significantly delay the appearance of a usable desktop. Review your startup applications and disable any you don’t need on login.
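The GRUB change can also be scripted. This bash sketch edits the file in place while keeping a .bak backup, and it takes the file path as a parameter so you can test it on a scratch copy first. The function name `set_grub_timeout` is hypothetical, and you still need to regenerate the GRUB configuration afterwards as described above.

```bash
#!/usr/bin/env bash

# Set GRUB_TIMEOUT in FILE (default /etc/default/grub) to SECONDS (default 1),
# keeping a backup at FILE.bak. GRUB_TIMEOUT_STYLE and other keys are untouched.
set_grub_timeout() {
  local file="${1:-/etc/default/grub}" seconds="${2:-1}"
  if grep -q '^GRUB_TIMEOUT=' "$file"; then
    sed -i.bak -E "s/^GRUB_TIMEOUT=.*/GRUB_TIMEOUT=${seconds}/" "$file"
  else
    # No existing entry: back up the file, then append one.
    cp "$file" "$file.bak" && echo "GRUB_TIMEOUT=${seconds}" >> "$file"
  fi
}
```

On a real system, run `set_grub_timeout /etc/default/grub 1` as root, then regenerate the configuration with update-grub or grub2-mkconfig as appropriate for your distribution.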

Linux Boot Time Troubleshooting Commands

| Tool/Purpose | Command | Description |
|---|---|---|
| systemd-analyze (Primary Boot Analysis) | systemd-analyze | Provides a high-level summary of the total boot time, broken down into kernel, initrd, and userspace (plus firmware and loader on systems whose boot loader reports them). |
| | systemd-analyze blame | Lists all running units, ordered by the time they took to initialize, to quickly identify the slowest services. |
| | systemd-analyze critical-chain | Shows the chain of dependent units that took the longest to complete, helping to identify bottlenecks in the boot sequence. |
| | systemd-analyze plot > boot.svg | Generates a detailed graphical SVG chart visualizing the entire boot process from start to finish. |
| journalctl (System Journal Analysis) | journalctl -b | Displays all system log messages from the current boot. |
| | journalctl -b -1 | Shows all log messages from the previous boot (requires a persistent journal). |
| | journalctl -b -p warning | Filters the current boot log to show only messages with a priority of “warning” or higher (e.g., error, critical). |
| | journalctl -u SERVICE_NAME.service -b | Isolates and displays all log messages for a specific service (e.g., cloud-init.service) from the current boot. |
| | journalctl -b SYSLOG_IDENTIFIER=kernel | Shows only the kernel messages (dmesg output) from the current boot log; journalctl -k gives a similar view. |
| dmesg (Kernel Message Analysis) | dmesg | Displays the kernel ring buffer, which contains driver and hardware-related messages from boot time onward. |
| | dmesg -T | Shows the kernel messages with human-readable timestamps. |
| | dmesg \| grep -i error | Filters kernel messages to search for lines containing “error” (case-insensitive). |
| systemd-bootchart (Graphical Analysis) | systemd-bootchart | (If installed) Samples system activity and generates a graphical performance chart. To cover the boot process, it is usually started from the kernel command line, e.g. init=/usr/lib/systemd/systemd-bootchart (the path varies by distribution). |
| Other systemd & Timing Tools (Status & Timing Checks) | time systemctl --state=loaded | Measures how long systemctl takes to list all loaded units (a responsiveness check, not a boot-time measurement). |
| | systemctl list-unit-files --state=enabled | Lists all services and other units that are configured to start automatically on boot. |
| | systemctl show -p ActiveEnterTimestamp UNIT_NAME | Shows the exact timestamp when a specific unit (e.g., multi-user.target) became active. |
| | systemctl --failed | Quickly lists any services or units that failed to start during the boot process. |