Counting rows in a PostgreSQL table is one of the most common operations for database administrators, developers, and data analysts.

However, the “right” way to count rows depends entirely on your needs—speed vs. precision.

In this guide, we’ll break down all methods to count rows in PostgreSQL, explain their tradeoffs, and help you choose the best approach for your use case.

Table of Contents

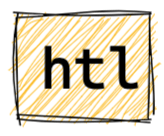

1. Exact Row Count with COUNT(*)

The COUNT(*) function is the standard way to get a 100% accurate row count for a table. It scans every row in the table to tally the total number of records.

Syntax & Examples

Basic Exact Count

-- Count all rows in the "users" table

SELECT COUNT(*) AS total_users FROM users;

-- Output

total_users

------------

2500782

Exact Count with Filter Conditions

Filter rows using a WHERE clause to count a subset of records—this is useful for targeted analysis (e.g., active users, completed orders).

-- Count active users (status = 1)

SELECT COUNT(*) AS active_users FROM users WHERE status = 1;

-- Count completed orders from 2025

SELECT COUNT(*) AS 2025_completed_orders

FROM orders

WHERE status = 'completed' AND order_date >= '2025-01-01';

-- Output for 2025 completed orders

2025_completed_orders

-----------------------

892456

Exact Count of Non-NULL Values

Use COUNT(column_name) instead of COUNT(*) to count only rows where the specified column has a non-NULL value.

-- Count users who provided a phone number (excludes NULLs)

SELECT COUNT(phone) AS users_with_phone FROM users;

-- Count distinct email domains (e.g., gmail.com, yahoo.com)

SELECT COUNT(DISTINCT SPLIT_PART(email, '@', 2)) AS unique_domains FROM users;

-- Output

unique_domains

---------------

42

Exact Count with JOINs

Count rows across multiple tables using joins—common for relational data (e.g., orders per customer).

-- Count total orders placed by users in the "USA" region

SELECT COUNT(o.order_id) AS usa_orders

FROM users u

JOIN orders o ON u.user_id = o.user_id

WHERE u.region = 'USA';

How It Works

PostgreSQL executes COUNT(*) by performing a sequential scan (or index scan, if optimized) of the entire table, counting each row one by one. For small tables, this is fast—but for large tables (millions/billions of rows), it can be extremely slow because it reads every row from disk.

Pros & Cons

| Pros | Cons |

|---|---|

| 100% accurate (no approximations) | Very slow for large tables (scans all rows) |

Works with filters, joins, and DISTINCT | Consumes significant I/O and CPU resources |

| Simple, standard SQL syntax | Blocks or is blocked by write operations (in some isolation levels) |

2. Fast Estimated Row Count with pg_class.reltuples

For most non-critical use cases (e.g., monitoring, reporting, or quick sanity checks), an estimated row count is sufficient—and it’s orders of magnitude faster than COUNT(*). The pg_class system catalog stores metadata about database objects, including reltuples (estimated number of rows for a table).

Syntax & Examples

First, let’s break down the core query (explained in detail later):

See also: Mastering the Linux Command Line — Your Complete Free Training Guide

-- Get estimated rows for a single table (public schema)

SELECT

relname AS table_name,

reltuples::bigint AS estimated_rows

FROM pg_class

WHERE relname = 'users' -- Replace with your table name

AND relkind = 'r'; -- 'r' = regular table (exclude indexes/views)

-- Output

table_name | estimated_rows

------------+----------------

users | 2500345

Example 1: List Estimated Rows for All Tables Across Schemas

If your database uses multiple schemas (e.g., public, analytics, archived), filter or group by schema to get a full overview.

SELECT

n.nspname AS schema_name,

c.relname AS table_name,

c.reltuples::bigint AS estimated_rows,

pg_size_pretty(pg_total_relation_size(c.oid)) AS total_size

FROM pg_class c

JOIN pg_namespace n ON n.oid = c.relnamespace

WHERE c.relkind = 'r' -- Regular tables only

ORDER BY estimated_rows DESC;

-- Output

schema_name | table_name | estimated_rows | total_size

-------------+-------------+----------------+------------

analytics | audit_log | 15000000 | 3256 MB

public | users | 2500345 | 617 MB

archived | 2024_orders | 892000 | 892 MB

Example 2: Compare Estimated vs. Actual Rows (Accuracy Check)

Validate how close reltuples is to the true row count by combining it with COUNT(*). This helps you decide if you need to run ANALYZE to refresh stats.

SELECT

'users' AS table_name,

reltuples::bigint AS estimated_rows,

(SELECT COUNT(*) FROM users) AS actual_rows,

ROUND(100.0 * reltuples / (SELECT COUNT(*) FROM users), 2) AS accuracy_pct

FROM pg_class

WHERE relname = 'users' AND relkind = 'r';

-- Output

table_name | estimated_rows | actual_rows | accuracy_pct

------------+----------------+-------------+--------------

users | 2500345 | 2500782 | 99.98

Example 3: Find Large Tables (Estimated Rows > 1 Million)

Identify tables that may need performance optimization (e.g., partitioning, indexing) using reltuples.

SELECT

n.nspname AS schema_name,

c.relname AS table_name,

c.reltuples::bigint AS estimated_rows,

pg_size_pretty(pg_total_relation_size(c.oid)) AS total_size

FROM pg_class c

JOIN pg_namespace n ON n.oid = c.relnamespace

WHERE c.relkind = 'r'

AND c.reltuples > 1000000 -- Filter tables with 1M+ rows

ORDER BY estimated_rows DESC;

Understanding reltuples::bigint AS estimated_rows

Let’s dissect this critical piece of the query:

c.reltuples: Thereltuplescolumn inpg_classstores the estimated row count as areal(single-precision float) value.::bigint: Converts the float to a 64-bit integer (eliminates decimals and avoids scientific notation for large numbers).AS estimated_rows: Renames the column for readability (emphasizes this is an estimate, not exact).

Why Convert to bigint?

-- Without conversion (real type: messy scientific notation)

SELECT reltuples FROM pg_class WHERE relname = 'large_table';

reltuples

-------------

1.50023e+07 -- Hard to read

-- With conversion (bigint: clean integer)

SELECT reltuples::bigint FROM pg_class WHERE relname = 'large_table';

reltuples

-----------

15002300 -- Easy to interpret

How reltuples Is Calculated

PostgreSQL does not update reltuples in real time (this would cripple performance for write-heavy tables). Instead:

reltuplesis updated byANALYZE,VACUUM, or auto-vacuum/auto-analyze (PostgreSQL’s background maintenance processes).- It’s based on statistical sampling (not a full table scan), so it’s an approximation.

Update reltuples Manually

If your estimate is outdated (e.g., after bulk inserts/deletes), refresh it with:

-- Update stats for a single table

ANALYZE users;

-- Update stats for all tables in the database

ANALYZE;

-- Clean dead tuples AND update stats (slower but thorough)

VACUUM ANALYZE users;

-- Verify when stats were last updated

SELECT

relname AS table_name,

last_analyze,

last_autoanalyze

FROM pg_stat_user_tables

WHERE relname = 'users';

-- Output

table_name | last_analyze | last_autoanalyze

------------+----------------------------+---------------------------

users | 2026-01-10 15:30:22.123+00 | 2026-01-12 03:15:45.678+00

Pros & Cons of reltuples

| Pros | Cons |

|---|---|

Blazing fast (0.1ms vs. seconds/minutes for COUNT(*)) | Not 100% accurate (error margin ~1-10% for well-maintained tables) |

| No table scan (reads only metadata) | Doesn’t work with filters, joins, or DISTINCT |

| No resource overhead | Outdated if ANALYZE/VACUUM hasn’t run recently |

3. Hybrid Approach: pg_stat_user_tables.n_live_tup

The pg_stat_user_tables view provides statistics about user tables, including n_live_tup (number of live rows, based on recent activity) and n_dead_tup (number of dead rows from deletes/updates). This is a middle ground between COUNT(*) (exact) and reltuples (fast estimate).

Syntax & Examples

Example 1: Basic Live/Dead Row Count

Track the health of a table by comparing live vs. dead rows—too many dead rows mean you need to run VACUUM.

SELECT

relname AS table_name,

n_live_tup AS live_rows,

n_dead_tup AS dead_rows,

ROUND(100.0 * n_dead_tup / NULLIF(n_live_tup + n_dead_tup, 0), 2) AS dead_row_pct

FROM pg_stat_user_tables

WHERE relname = 'orders';

-- Output

table_name | live_rows | dead_rows | dead_row_pct

------------+-----------+-----------+--------------

orders | 1850000 | 250000 | 11.90

Example 2: Identify Tables Needing VACUUM

Automatically flag tables with a high percentage of dead rows (e.g., >10% dead rows).

SELECT

relname AS table_name,

n_live_tup AS live_rows,

n_dead_tup AS dead_rows,

ROUND(100.0 * n_dead_tup / NULLIF(n_live_tup + n_dead_tup, 0), 2) AS dead_row_pct,

CASE

WHEN n_dead_tup / NULLIF(n_live_tup + n_dead_tup, 0) > 0.1 THEN '⚠️ NEEDS VACUUM'

ELSE '✅ HEALTHY'

END AS status

FROM pg_stat_user_tables

ORDER BY dead_row_pct DESC;

Example 3: Batch Check All Public Tables

Get a holistic view of all tables in the public schema, including their row counts and maintenance status.

SELECT

relname AS table_name,

n_live_tup AS live_rows,

n_dead_tup AS dead_rows,

last_vacuum,

last_analyze

FROM pg_stat_user_tables

WHERE schemaname = 'public'

ORDER BY live_rows DESC;

Key Notes

n_live_tupis updated byANALYZE/auto-analyze (same asreltuples).- It includes counts of live vs. dead rows (dead rows = deleted/updated rows not yet cleaned up by VACUUM).

- More reliable than

reltuplesfor tables with frequent writes (but still an estimate).

Performance Comparison

Let’s compare the three methods on a table with 15 million rows:

| Method | Execution Time | Accuracy | Use Case |

|---|---|---|---|

COUNT(*) | 15–20 seconds | 100% | Financial reports, critical business logic |

reltuples | 0.1 ms | ~99% | Quick checks, monitoring dashboards |

n_live_tup | 0.2 ms | ~98% | Tracking live vs. dead rows |

Best Practices for Counting Rows in PostgreSQL

Use COUNT(*) When:

- You need an exact row count (e.g., invoicing, compliance reports).

- The table is small (fewer than 100,000 rows).

- Precision is more important than speed.

Use reltuples When:

- You need a fast estimate (e.g., UI dashboards, capacity planning).

- The table is large (millions/billions of rows).

- A small margin of error is acceptable.

Use n_live_tup When:

- You need to track live vs. dead rows (e.g., identifying tables that need VACUUM).

- You want a balance of speed and moderate accuracy.

Additional Tips:

- Avoid

COUNT(1)Instead ofCOUNT(*): They perform identically in PostgreSQL—COUNT(*)is more readable and standard. - Index Optimization for

COUNT(*): If you use filters (e.g.,WHERE status = 'active'), create an index on the filtered column to speed upCOUNT(*).-- Create a partial index for faster counts of completed orders CREATE INDEX idx_orders_completed ON orders(status) WHERE status = 'completed'; - Schedule Regular ANALYZE: For accurate estimates, ensure auto-analyze is enabled (default in PostgreSQL) or run

ANALYZEafter bulk data changes.

Common Issues & Solutions

Issue 1: reltuples Is Way Off

Cause: ANALYZE/VACUUM hasn’t run after bulk inserts/deletes.

Fix: Run ANALYZE your_table; to refresh statistics.

-- Before ANALYZE: outdated estimate

SELECT reltuples::bigint FROM pg_class WHERE relname = 'orders'; -- 1000000

-- Bulk insert 850,000 rows

INSERT INTO orders SELECT * FROM orders_staging;

-- After insert: estimate still outdated

SELECT reltuples::bigint FROM pg_class WHERE relname = 'orders'; -- 1000000

-- Run ANALYZE to update stats

ANALYZE orders;

-- After ANALYZE: accurate estimate

SELECT reltuples::bigint FROM pg_class WHERE relname = 'orders'; -- 1850000

Issue 2: COUNT(*) Is Too Slow

Cause: Full table scan on a large table.

Fix 1: Use reltuples for estimates if precision isn’t critical.

Fix 2: Create a partial index for filtered counts.

-- Slow count without index

EXPLAIN ANALYZE SELECT COUNT(*) FROM orders WHERE status = 'completed'; -- 12 seconds

-- Create partial index

CREATE INDEX idx_orders_completed ON orders(status) WHERE status = 'completed';

-- Fast count with index

EXPLAIN ANALYZE SELECT COUNT(*) FROM orders WHERE status = 'completed'; -- 0.3 seconds

Issue 3: n_live_tup Shows More Dead Rows Than Live

Cause: The table has excessive dead tuples (uncleaned deletes/updates).

Fix: Run VACUUM ANALYZE your_table; to clean dead rows and update stats.

-- High dead row percentage

SELECT ROUND(100.0 * n_dead_tup / (n_live_tup + n_dead_tup), 2) AS dead_pct FROM pg_stat_user_tables WHERE relname = 'orders'; -- 45%

-- Clean dead rows and update stats

VACUUM ANALYZE orders;

-- Low dead row percentage after VACUUM

SELECT ROUND(100.0 * n_dead_tup / (n_live_tup + n_dead_tup), 2) AS dead_pct FROM pg_stat_user_tables WHERE relname = 'orders'; -- 2%

Summary

Counting rows in PostgreSQL is not a one-size-fits-all task—choose your method based on speed vs. precision:

- Exact counts: Use

COUNT(*)for critical, precise results (slow for large tables). - Fast estimates: Use

pg_class.reltuplesfor near-instant approximations (ideal for large tables). - Hybrid stats: Use

pg_stat_user_tables.n_live_tupto track live/dead rows with moderate accuracy.

Remember:

reltuplesandn_live_tuprely onANALYZEfor accuracy—refresh stats after bulk data changes.- Prioritize

COUNT(*)only when exactness is non-negotiable; otherwise, use estimates to save time and resources.

By understanding these methods, you can optimize row counting for performance and accuracy in any PostgreSQL workload.