Kubernetes, the orchestration system for managing containerized applications, offers a variety of service types to ensure your applications are accessible and communicate efficiently.

Each service type caters to specific use cases, balancing accessibility with resource allocation.

Let’s delve into the primary Kubernetes Service types—ClusterIP, NodePort, LoadBalancer, and cloud provider-specific LoadBalancers—to understand their use cases and implications for resource usage.

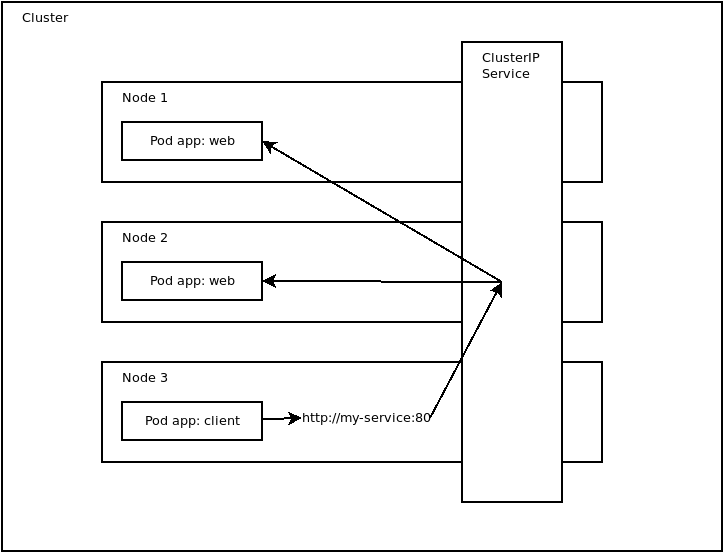

ClusterIP: The Default Choice for Internal Communication

Use Case: ClusterIP is the default Kubernetes Service type, designed primarily for internal communication between different application components within a cluster.

It’s ideal for applications that don’t require external access, such as backend services or databases that only need to communicate with other services within the same Kubernetes cluster.

Accessibility: Services of type ClusterIP receive an internal IP address in the cluster, making them reachable only from within the cluster. This encapsulation ensures internal services remain isolated from external access, enhancing security.

When a ClusterIP Service is created, Kubernetes assigns it a virtual IP address that is reachable only from within the cluster.

Resource Allocation: ClusterIP services require minimal additional resources, as they leverage the cluster’s internal networking. They’re an efficient choice when external accessibility isn’t a requirement, conserving resources for other components.

Example

    apiVersion: v1
    kind: Service
    metadata:
      name: backend
    spec:
      selector:
        app: backend
      ports:
        - name: http
          port: 80
          targetPort: 8080

Here are the port settings used in the ClusterIP Service:

- ports:: This section defines the network ports that the Service will expose.

- name: http: Each port can be given a name, and in this case, it’s named http, which is a common convention for HTTP ports but not a technical requirement.

- port: 80: This is the port number that the Service listens on. When other services or resources within the cluster need to communicate with the backend service, they use port 80.

- targetPort: 8080: This is the port on the Pods to which the Service forwards traffic. Although the Service is exposed on port 80, the application inside the Pods listens on port 8080, so the Service transparently forwards traffic from its own port (80) to the targetPort (8080) on the Pods.
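To make the port mapping concrete, here is a minimal sketch of a client Pod that calls the backend Service from inside the cluster. The Pod name `backend-client` and the `curlimages/curl` image are assumptions chosen for illustration, and the sketch assumes the Pod runs in the same namespace as the Service, so the short DNS name `backend` resolves.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: backend-client            # hypothetical name, for illustration only
spec:
  restartPolicy: Never            # run the request once, then stop
  containers:
    - name: curl
      image: curlimages/curl      # assumed image; any image that ships curl would do
      # The client targets the Service port (80), not the Pod port (8080).
      # The Service forwards the request to targetPort 8080 on a backend Pod.
      command: ["curl", "-sS", "http://backend:80/"]
```

The client never needs to know that the application listens on 8080. If the targetPort changes later, only the Service definition has to be updated.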

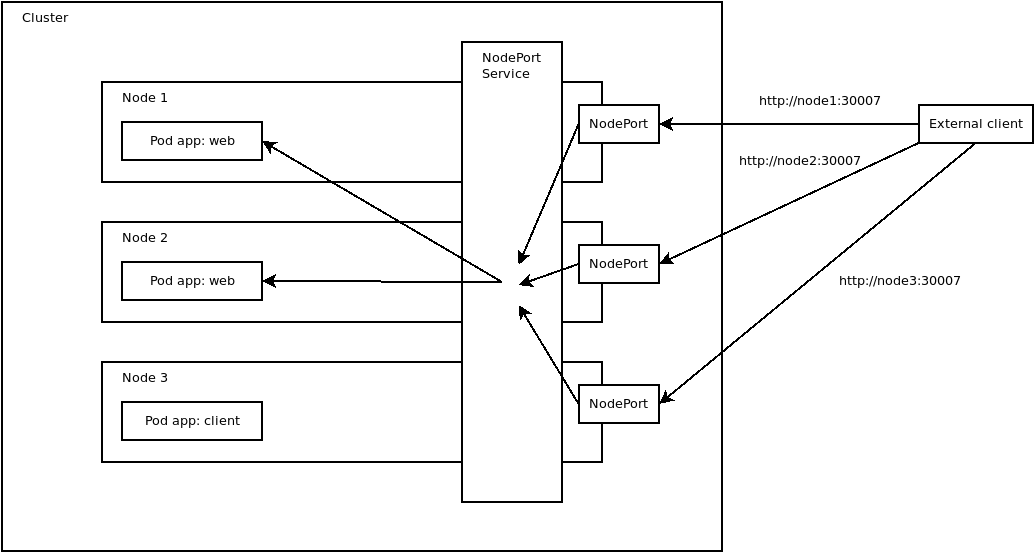

NodePort: Bridging Internal Services and the External World

Use Case: NodePort services are suited for scenarios requiring external access to your applications, such as exposing a web application or API to the outside world.

This service type is often used in development environments or small-scale production setups.

Kubernetes opens a designated port on every node and forwards traffic arriving on that port to the Service's cluster IP, and from there to the backing Pods.

Accessibility: A NodePort service makes your application accessible from outside the cluster by opening a specific port on each of the cluster's nodes. The port comes from the NodePort range, which is 30000-32767 by default. External users can access the service using any node's IP address combined with the allocated node port.

Resource Allocation: Compared to ClusterIP, NodePort services consume more resources since they require opening a network port on every node in the cluster. This broader accessibility may also necessitate additional security considerations.

Example:

    apiVersion: v1
    kind: Service
    metadata:
      name: frontend
    spec:
      selector:
        app: frontend
      type: NodePort
      ports:
        - name: http
          port: 80
          targetPort: 8080
          nodePort: 30007

The provided YAML snippet is a definition for a Kubernetes Service resource. This Service is configured to expose a set of Pods as a network service.

Below is a detailed explanation of each part of the Service definition:

- type: NodePort: This specifies the type of Service. NodePort exposes the Service on a static port (nodePort) on each node’s IP address. This type of Service makes it accessible from outside the cluster.

- ports:: This section defines the ports that the Service will expose.

- name: http: This is an identifier for the port and is named http. Naming ports can be useful for readability and reference, especially when multiple ports are defined in a Service.

- port: 80: This is the port that the Service will listen to inside the cluster. Other Pods within the cluster can communicate with this Service on this port.

- targetPort: 8080: This specifies the port on the Pods that the traffic will be forwarded to. In this case, the Service forwards traffic to port 8080 on the Pods selected by the selector. This is the port where the application inside the Pod is listening.

- nodePort: 30007: Since this Service is of type NodePort, it’s accessible outside the cluster. The nodePort field specifies the port on each node’s IP where this Service will be exposed. External traffic coming to any node in the cluster on this port will be forwarded to this Service. Here, the chosen port is 30007.

In summary, this Service named frontend is designed to expose Pods labeled with app: frontend to external traffic. It listens on port 80 within the cluster but is accessible from outside the cluster on each node's IP at port 30007, with traffic being forwarded to port 8080 on the selected Pods. This setup is typical for exposing a front-end web application or API to external users.
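The Service only routes traffic; it still needs Pods whose labels match its selector. The following is a minimal sketch of a Deployment that the frontend Service would select. The Deployment itself, the container name `web`, the replica count, and the image `example.com/frontend:1.0` are hypothetical and not part of the original example.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 2                     # assumed replica count
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend             # must match the Service's selector (app: frontend)
    spec:
      containers:
        - name: web
          image: example.com/frontend:1.0   # hypothetical image
          ports:
            - containerPort: 8080 # must match the Service's targetPort
```

If the Pod labels do not match the Service selector, the Service has no endpoints and requests to the node port are not forwarded to any Pod.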

Pro tips:

A NodePort Service builds on the ClusterIP Service type. When you create a NodePort Service, Kubernetes also allocates a cluster IP for it, exactly as it would for a ClusterIP Service. An incoming request first reaches a node on the node port, is forwarded to the Service's cluster IP, and is then directed to one of the underlying Pods (External Client -> Node -> NodePort -> ClusterIP -> Pod). As a result, internal clients do not need the node port at all; they can connect directly to the cluster IP on the Service port.

LoadBalancer: Catering to High Traffic Volumes

Use Case: LoadBalancer services are tailored for production environments experiencing high traffic volumes. They’re commonly used when you need to distribute incoming traffic efficiently across multiple backend pods to ensure high availability and fault tolerance.

Accessibility: This service type integrates with external load balancers provided by cloud platforms or on-premise load balancer hardware, offering a single access point for external traffic to reach the application. The external load balancer routes incoming traffic to the appropriate node and, subsequently, to the service.

Resource Allocation: Utilizing a LoadBalancer service entails significant resource allocation, as it involves leveraging external load balancing hardware or cloud service provider resources. This can lead to higher costs but is often justified by the performance and reliability improvements in handling large traffic volumes.
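Example

As a rough sketch, a LoadBalancer Service looks almost identical to the NodePort example and differs mainly in its type field. The name `frontend-lb` is hypothetical, and the selector and ports are carried over from the earlier frontend example as an assumption.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-lb       # hypothetical name
spec:
  type: LoadBalancer      # asks the environment to provision an external load balancer
  selector:
    app: frontend         # assumed to match the frontend Pods from the NodePort example
  ports:
    - name: http
      port: 80            # port exposed by the load balancer and the Service
      targetPort: 8080    # port the application listens on inside the Pods
```

Once the load balancer is provisioned, its address appears in the EXTERNAL-IP column of kubectl get service. In a cluster with no load balancer integration, that column typically stays at pending.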

Cloud Provider’s Load Balancer: Leveraging Cloud-Specific Features

Use Case: When running Kubernetes within a cloud provider’s environment, using the cloud provider’s specific LoadBalancer service can offer enhanced performance, reliability, and integration with the cloud ecosystem.

Accessibility: Similar to the generic LoadBalancer service type, cloud provider-specific LoadBalancers expose your service externally through the cloud provider’s load balancing infrastructure. This seamless integration often provides additional features like automated health checks, SSL termination, and more sophisticated traffic distribution.

Resource Allocation: Opting for a cloud provider’s LoadBalancer can result in cost savings and performance benefits due to the tight integration with the cloud provider’s infrastructure. The actual resource allocation and costs will vary depending on the provider and the service configuration.
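Example

Cloud-specific behavior is usually requested through annotations on the Service. The sketch below assumes a cluster running on AWS and uses the `service.beta.kubernetes.io/aws-load-balancer-type` annotation to request a Network Load Balancer. Annotation names and accepted values differ between providers and controller versions, so treat this as an illustration and check your provider's documentation. The Service name `frontend-lb` is hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-lb       # hypothetical name
  annotations:
    # AWS-specific assumption: request a Network Load Balancer.
    # Other providers use different annotation keys and values.
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
spec:
  type: LoadBalancer
  selector:
    app: frontend         # assumed to match the frontend Pods from the earlier example
  ports:
    - name: http
      port: 80
      targetPort: 8080
```

The spec is unchanged from a generic LoadBalancer Service. Only the annotation ties the manifest to one provider, which keeps it easy to port to another environment.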

Conclusion

Choosing the right Kubernetes Service type is pivotal in designing efficient, secure, and scalable applications.

Whether you need internal communication with ClusterIP, external accessibility with NodePort, high-traffic handling with LoadBalancer, or cloud-specific advantages with a cloud provider’s LoadBalancer, Kubernetes offers a solution tailored to your needs.

Balancing the requirements of accessibility and resource allocation will guide you in selecting the most appropriate service type for your application.